Is AI art causing future shock or age-old economic anxiety?

The tech isn’t the problem. Our civic failure to grow along with it is.

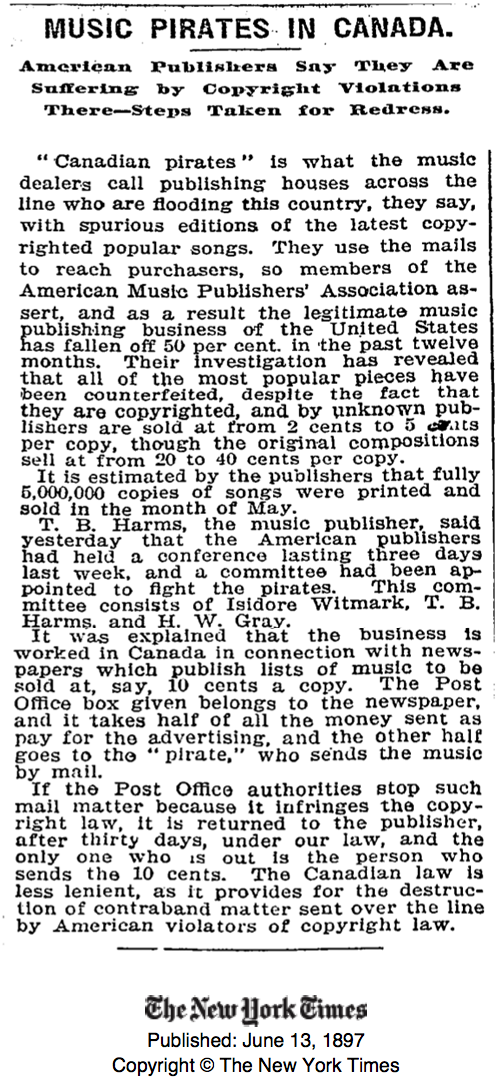

At the turn of the 20th century, Canadians were notorious pirates. You wouldn’t know it to look at us (well, minus all the stealing we’d done from Indigenous communities), but we were lean, mean, thieving machines… when it came to sheet music.

Remember, this was before TV, just as radio was getting off the ground. Film productions were novelty shorts in a world of vaudeville and live theatre, musicals, opera, and dance. So how did one make money as a creator? Well, in part through royalties off live performance, and in part by selling the music directly, to a market of households that usually had at least one person who could play an instrument or sing. But if someone figured out how to bypass purchasing sheet music, on a mass scale? Well, then they’d be affecting music publishers’ profits, and by extension the artists. How would creators survive, if no one was paying for their work?

Sheet music is still a niche industry today, but technology over the last 120 years has routinely required creators (and their capitalists) to rethink how they’ll get paid from making art. In the 1980s, blank audio cassettes gave us homemade mixtapes, and the music industry fought hard against the practice, while radio stations cut over song intros and endings to foil recording attempts. In the 2000s, file-sharing platforms like Napster became the scourge of most musicians, and a lot more of their income started to ride on concert and merchandise sales.

Other industries have struggled similarly. Do you remember Tron (1982), one of the world’s first films to use extensive CGI? Did you know it was disqualified from Oscar consideration in the special effects category, because CGI was considered “cheating”? That feeling quickly changed, but at first the new tech did seem to imperil the livelihoods of traditionally trained animators. So too did the rise of cameras in the 1800s: photography pretty much gutted the market for portrait miniatures.

Which brings us to late August 2022, when Jason M. Allen won first prize at the Colorado State Fair with an image, “Théâtre D’opéra Spatial”, generated by an artificial-intelligence tool called Midjourney. Yes, it was in an arts category specifically for “digitally manipulated photography”, competing against a little over a dozen other entries. No, that didn’t stop folks from crying foul, accusing Allen of fraud, and expressing deep concerns about the future of art in a world with Midjourney, DALL-E 2, Imagen, and other such programs capable of answering human prompts with images synthesized from immense libraries of visual content.

Are we in a moment of what Alvin Toffler and Adelaide Farrell called “future shock”—a period of cultural change far surpassing our ability to adapt to it?

Well, yes, in general, probably. (Thank you, escalating climate change events!)

But if it helps, in the case of AI art it’s a future shock we’ve dealt with before: a new technology or social capability threatening an already precarious industry, in a world with very little economic stability for most of its denizens.

And that knowledge gives us the power to answer our current shock differently. With an eye, that is, toward building a world more resilient to any new tech’s rise.

Cory Doctorow, and the next Luddite revolution

In January, prominent sci-fi writer, futurist, and anti-monopolist Cory Doctorow argued for the reclamation of a much-maligned resistance group from the early 1800s. “Science Fiction Is a Luddite Literature”, Doctorow wrote, because both sci-fi writers and the Luddites “concern themselves with the same questions: not merely what the technology does, but who it does it for and who it does it to.”

He went on to explain that the Luddites are incorrectly regarded as textile workers who destroyed new technology out of hatred for the technology itself. But why would they hate technology that offered to ease their labors? Technology that could also make the same fine textiles they spun for rich people affordable for their families, too?

The problem wasn’t the tech. The problem was the capitalists:

What were they fighting about? The social relations governing the use of the new machines. These new machines could have allowed the existing workforce to produce far more cloth, in far fewer hours, at a much lower price, while still paying these workers well (the lower per-unit cost of finished cloth would be offset by the higher sales volume, and that volume could be produced in fewer hours).

Instead, the owners of the factories—whose fortunes had been built on the labor of textile workers—chose to employ fewer workers, working the same long hours as before, at a lower rate than before, and pocketed the substantial savings.

There is nothing natural about this arrangement. A Martian watching the Industrial Revolution unfold through the eyepiece of a powerful telescope could not tell you why the dividends from these machines should favor factory owners, rather than factory workers.

Cory Doctorow, “Science Fiction Is a Luddite Literature”, Locus Magazine, January 3, 2022

Doctorow doesn’t just call on folks to be Luddites today; he points out that many of us are already Luddites, because we already engage with these questions of how best to ensure that new technology serves us all well. Not every sci-fi writer, of course, is engaged in that project—but enough are, and enough other policy wonks, politicians, community organizers, and activists, too, that we don’t even need to think of this as “starting” another revolution. This is what we humans do, and have always done.

We simply have to own up to this legacy of more constructive responses to new technology, so as not to cede future innovations to those who would use them to uphold an unjust status quo.

Humanity’s long history of companion-tech

Much has been written about animal companions having been crucial to our species’ rise. The domestication of the dog, the harnessing of “beasts of burden”, the “breaking” of the horse, the keeping of herds and flocks: the history of human civilization has always been relational.

But while human folklore doesn’t fixate much on the fear of other-animal uprisings, we have a storied wealth of anxiety when it comes to subjugating one another. Whether along national, class, ethno-religious, racialized, or gendered lines, the threat of losing out to other groups emerges in all our literary traditions.

And how better to lose out, than to be behind on tech?

“And the Lord was with Judah; and he drave out the inhabitants of the mountain; but could not drive out the inhabitants of the valley, because they had chariots of iron.”

Judges 1:19, KJV

It is the law as in art, so in politics, that improvements ever prevail; and though fixed usages may be best for undisturbed communities, constant necessities of action must be accompanied by the constant improvement of methods. Thus it happens that the vast experience of Athens has carried her further than you on the path of innovation.

Thucydides, History of the Peloponnesian War

This is a trick question, though, because one first has to assume they’re “losing out”: to buy into technological progress as a mere arms race, a zero-sum game of wins and losses. That’s an easy narrative to fall for, and not just in our military histories. Jared Diamond’s pop-sci anthropological text, Guns, Germs, and Steel (1997), was highly popular because it presented a determinist argument for Western domination, one suggesting that this historical outcome was guaranteed by geographical access to better and more abundant proteins and metals. Absent in this view of history is any agency on the part of the humans themselves. With access to better materials, the argument goes, their use to plunder, destroy, and oppress was inevitable.

But even though many animals also wield “tech” against one another, we can look to the rise of tool use elsewhere in the animal world and plainly see the much greater role for individual and mutual benefit in innovating: for food acquisition, for shelters, and for play. Our history likewise abounds in the use of technology to build better domestic infrastructure, craft more dazzling monuments and trinkets, entertain to pass the time, and ease the hardships of daily labor.

One more recent example also illustrates how much humans have long been charmed by the idea of AI serving as a helper tool. ELIZA, a chatbot first developed in the 1960s, responded to user inputs with answers in the style of Rogerian psychotherapy. When its creator first tested ELIZA with average humans, the results yielded some of our first anxieties about a computer passing the Turing Test, in which a machine is made to convince a human that it is human, too.

But the anxiety wasn’t universal. In fact, people liked talking to ELIZA, even when they knew that “she” wasn’t real. ELIZA served to make people feel heard, and gave them an outlet to talk about their struggles. Decades later, our world is filled with robots who perform similar helper functions, to offset our growing lack of human companionship. In Japan, RI-MAN offers a friendly face and soft tactile experience while moving the elderly and patients in need, and Paro is a cuddly baby-seal robot who offers therapeutic companionship. Films like Her (2014), in which a lonely lead falls in love with his human-emulating tech assistant, are also not so much sci-fi as a meditation on how we already anthropomorphize programs like Siri and Alexa.

Human collaborations with AI in art

Is it any wonder, then, that while some were crying foul about Allen’s winning art piece, which he himself said involved generating hundreds of images and fine-tuning his favorites, others were doing what humans have done for millennia? Leaning into emerging technology, and forging exciting new working relationships with it?

For instance, on Twitter, one fellow artist quickly dove into one of humanity’s favorite pastimes: analysis. In a thread building off the hype over Allen’s prize-winning image, Jay Dragon reflected on what we might learn from the piece if we read it as we do other works of art. Dragon examined its compositional style, discussed its title, and considered the themes and cultural resonance points it invoked. The result? A reminder that art still requires human interaction to make it so.

But another, even more compelling example emerged soon after the outcry over Allen’s win. This past weekend, fantasy writer Ursula Vernon, publishing as T. Kingfisher, shared a comic created in part with Midjourney, and talked about how difficult the process of refinement had been: how much she still had to do, that is, to make the images this tool produced match her artistic vision for the project.

The comic itself, “A Different Aftermath”, is an ode to the healthier relationships we can cultivate with nature, even amid ruin. There’s a fitting symmetry here, then, in Vernon simultaneously celebrating a healthy, exploratory relationship with AI tools.

Beneath the panic about new tech

So what’s our real crisis? What underpins all this dread and “future shock” around AI advancements that many of us are also eager to explore?

Simply this: a narrative of loss, especially with respect to economic prospects.

Because even as some “early adopters” are always going to rise up and innovate, deftly optimizing new tech for its market potential and general utility, there are also going to be plenty left behind, who painstakingly trained for and built whole careers around preceding technology and the cultural contexts it created.

We see this especially in the case of drivers in the US: a workforce affected in complex ways by the rise of automated machinery, but not because of the machines themselves. In these transitional years especially, what we’re seeing is employers using new software (which isn’t robust enough to handle all weather conditions, and therefore cannot do the job alone) to monitor driver activities to the point where human variation is no longer as acceptable on long commutes. The same holds true for Amazon warehouses and related delivery services in the gig economy. The technology is just advanced enough to provide customers with greater ease, but on the worker-side, it’s often used to dehumanize the people still necessary to make production run smoothly.

What’s the solution? Not playing into the narrative of inevitable loss. Not making the same determinist mistake that stories like Guns, Germs, and Steel invite us to make. Yes, historically, people of greater means and hardier resources very often and quite devastatingly wielded new technology and its innovations against one another.

But that was always a human choice, not an inevitable outcome.

Giving in to “replacement” or “oppression” myths around AI is simply a way of offloading that choice to our creations. Some humans choose to use advanced technologies to dehumanize others. Others don’t.

And still others recognize that, wherever new technology stands ready to supplant some aspect of human industry, it behooves us to respond by building more robust political innovations to match. The tech isn’t the problem. Our civic failure to grow along with it is. Only by surmounting that failure—instead of trying to turn back the clock on invention—can we ever ensure that humans will still be able to support themselves, and each other, as we transition into all our strange new worlds ahead.

Originally published in September 2022.