Are AI art programs ripping off human artists?

Artists erupted in protest when they learned that AI art engines like Stable Diffusion were trained on their copyrighted works. This revelation has ignited a debate about the meaning of fair use.

Artificial intelligence is getting scarily good. Among other abilities, AI art engines like DALL-E 2, Midjourney and Imagen can take an arbitrary text description and create original artwork that fits the prompt. Their images range from simple to dizzyingly complex, from concrete to abstract, from cartoonish to photorealistic. In at least one case, AI-generated art won first place in a competition.

This new evolution shatters the limits of what we thought computers could do. The classic conception of the computer is a mindless golem that excels at repetitive number-crunching but is incapable of creativity. However, these art engines have an uncanny intelligence. They can match human beings, imagination for imagination, giving form and shape to any notion we can conceive of. Some even seem to have a sense of humor.

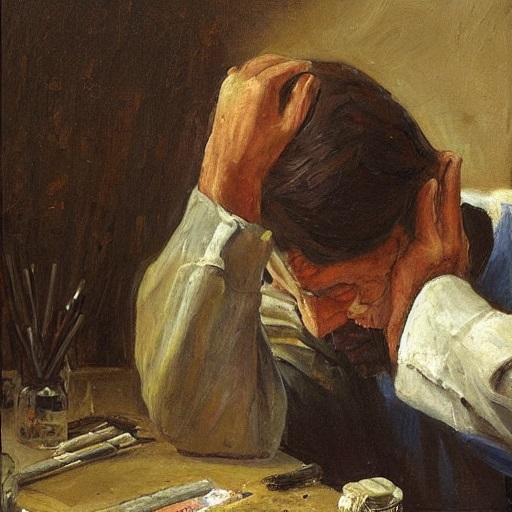

Human artists and graphic designers are worried—rightfully so—that they’ll lose their jobs to these programs. Now a new entrant in the field is stirring up a bigger controversy.

How can a computer have imagination?

In general terms, text-to-image engines are based on neural networks—software that mimics the architecture of a biological brain. Neural networks are made up of software nodes that signal each other in a complex web of connections, like neurons in the brain. They learn from experience by rewiring themselves just as organic brains do, reinforcing some connections and pruning others.
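
To make "reinforcing some connections" concrete, here is a minimal Python sketch of a single artificial neuron learning the logical OR function. It is a toy illustration only, not how Stable Diffusion or any real art engine is built. The function names, learning rate, and number of training passes are all assumptions chosen for the example.

```python
import math

def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def predict(weights, bias, x1, x2):
    """The neuron's output: a weighted sum of its inputs, squashed by sigmoid."""
    return sigmoid(weights[0] * x1 + weights[1] * x2 + bias)

def train_neuron(samples, epochs=5000, learning_rate=0.5):
    """Nudge the connection weights a little after every example.

    Connections that help produce the right answer are strengthened;
    those that push toward the wrong answer are weakened.
    (epochs and learning_rate are illustrative values, not tuned ones.)
    """
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            error = target - predict(weights, bias, x1, x2)
            weights[0] += learning_rate * error * x1
            weights[1] += learning_rate * error * x2
            bias += learning_rate * error
    return weights, bias

# Training data: the truth table for logical OR.
OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

weights, bias = train_neuron(OR_SAMPLES)
for (x1, x2), target in OR_SAMPLES:
    print((x1, x2), "target:", target, "output:", round(predict(weights, bias, x1, x2), 3))
```

Nothing in the finished neuron stores the four training examples. All that remains is three numbers (two weights and a bias) that happen to produce the right answers.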

In the case of the AI art engines, these programs are trained on very large data sets: millions of human-created artworks with text captions describing them. The AI learns how to describe a new image by associating keywords with characteristics of the training data. Reversing that process yields software that takes keywords as input and generates an image as output. (Yes, this sounds like a sci-fi trick that shouldn’t work in real life: “Reverse the polarity!”)

The latest of these digital dreammakers is called Stable Diffusion. Unlike other art engines, which are restricted or closed to the public, this one is open source and free to use. You can install and run it on your home computer.

This is an amazingly cool and powerful tool, but it’s not without controversy. The closed-source art engines have filters to prevent creation of pornography, violent images, and realistic deepfakes of living people. But Stable Diffusion has no such limits.

However, a bigger outcry erupted when Stability AI, the company behind Stable Diffusion, disclosed their training set: LAION-5B, a collection of 5 billion images with descriptive captions. The vast majority were harvested from public websites, including social media like Pinterest and stock photo sites like Getty Images. This means that Stable Diffusion’s training set includes millions of copyrighted images by living artists.

Training a neural network—does it count as fair use?

For artists, the prospect of being supplanted by a computer program was bad enough. But it was worse for them to learn that these programs were based on the creative work of human beings. Some artists feel, not without reason, that this is using their own art against them.

To be clear, copyright doesn’t grant absolute control. Copyright lets you stop people from reselling your art without your consent. But it doesn’t block fair use, which means you can use someone else’s art in transformative ways, even if it’s a use the creator wouldn’t approve of.

Courts have ruled that “scraping” (mass harvesting of publicly accessible web data) doesn’t constitute illegal hacking. But this is unquestionably an area where technology has raced ahead of the law. It’s very likely that lawsuits are coming to test it. Getty Images has already banned AI-generated content in anticipation of this.

The question is whether training a neural network on copyrighted art counts as transformative fair use. This is as much a philosophical problem as a legal one.

Here’s where I’m going to plant my flag in the ground: whatever their disruptive effects, AI art programs are fair use. That’s because they’re not mindlessly copying the creative work of humans. Nor do they paste together elements of preexisting artworks, like making a collage. They’re doing something genuinely analogous to learning.

Distilling art to its essence

Stable Diffusion and other art engines don’t contain the artworks they’re trained on. The training process is like a sieve: as billions of images wash through the neural network, it learns what they have in common. It filters out irrelevant features and homes in on the relevant ones, distilling each one to its essence.

It’s true that each training image leaves an imprint on the network, in the form of subtle changes to its map of connections. But that’s only true in the same sense that the pattern of sand grains on a beach stores a trace of each wave that’s passed over them. It’s not possible to reconstruct those artworks from the structure of the network. (Stable Diffusion’s “weights” file—the map that defines the connections between software nodes—is only 4 gigabytes, far too small to incorporate the billions of images it’s trained on.)
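
The size argument can be checked with back-of-the-envelope arithmetic. The short Python sketch below uses the 4 gigabyte and 5 billion figures quoted above. Treating a gigabyte as 10^9 bytes, and taking 100 kilobytes as the size of a typical compressed web image, are assumptions made purely for illustration.

```python
# Figures quoted in the text above.
WEIGHTS_BYTES = 4 * 10**9       # 4 GB weights file (assuming 1 GB = 10**9 bytes)
TRAINING_IMAGES = 5 * 10**9     # roughly 5 billion captioned images in LAION-5B

# Illustrative assumption, not a measured value: a typical compressed image.
ASSUMED_IMAGE_BYTES = 100_000   # about 100 KB

def bytes_per_image(weights_bytes, image_count):
    """Storage the weights file could devote to each image if split evenly."""
    return weights_bytes / image_count

budget = bytes_per_image(WEIGHTS_BYTES, TRAINING_IMAGES)
shortfall = ASSUMED_IMAGE_BYTES / budget

print(f"Budget per training image: {budget:.1f} bytes")
print(f"An assumed 100 KB image is about {shortfall:,.0f} times larger than that")
```

Under these assumptions the weights file has less than one byte to spare per training image, which is not enough to hold even a single pixel of full color.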

As an analogy: how do human artists learn to create? They do it by studying existing art, both to learn principles of composition and to obtain inspiration. If I studied a work of art in depth, and afterward you performed a microscopically detailed, synapse-level scan of my brain, you might find that new connections had formed. That doesn’t mean the artist’s copyright gives them jurisdiction over the neural pathways in my brain. Still less could they claim copyright infringement over something I made that was merely inspired by their style.

I’m not saying this because I’m safe from AI automation. It’s possible that a text engine like GPT-3 could be trained on my catalogue of past writings, so it could write columns arguing for atheism (important: please, no one show this column to the OnlySky higher-ups).

If I were replaced by such a program, I’d be saddened. But I wouldn’t feel that my rights had been violated.

You can’t put the genie back in the bottle

The bigger problem is this: Even if a court ruled that programs like Stable Diffusion were a violation of creators’ rights, this technology is already out in the world. It can’t be un-invented.

The existing programs could be shut down and their source code and data deleted. However, it would still be possible to create a new art engine trained on only public domain or licensed images, and that program would still be capable of replacing human beings.

When you can’t go back, the only choice is to go forward. We need to think about how this technology can coexist with human artists.

One possibility is legislation to create a robot dividend. Artists whose work was included in the training corpus of an AI engine would be paid a small royalty whenever that engine’s art is used for commercial purposes. Perhaps that wouldn’t be sufficient, and we should consider alternatives—but that conversation has to start now, because the future that needs it is already upon us.